Improving the performance of a Hugo site

With a static site, all the webserver has to do is respond to requests with files from the disk: no generating content, no databases and no API calls. Therefore, when looking at performance, the main aim should be delivering those files to the user as fast as possible.

The main bottleneck is typically the network connection to the end-user, so making the files as small as possible is a key way to reduce loading times and improve the user’s experience.

Minification

Much of the information required to display a website is sent in plain-text formats, including HTML, CSS and Javascript. As a developer or web designer, it is beneficial to lay out the text in a readable manner, using spaces, tabs, newlines and comments, even when the format does not require them. From a browser's perspective, these extra characters make no difference to the result, and they may even slow down parsing and network transfer. Removing them can significantly reduce the file size.

For Javascript and other formats containing variables, another way to save bytes is to rename long, descriptive variable names to much shorter ones, ideally a single character.

Both these improvements are known as minification. For example, here is an uninspiring sample of Javascript that creates a function then calls it:

```javascript
// Function to print sum of numbers
function print_sum(number1, number2){
    var sum = number1 + number2
    console.log(sum)
}

// Assign variables
var x = 5
var y = 7

// Print sum
print_sum(x,y)
```

Using a Javascript minifier, unnecessary characters are removed and local variable names are shortened, reducing the size from 204 to 76 bytes:

```javascript
function print_sum(n,o){o=n+o;console.log(o)}var x=5,y=7;print_sum(x,y);
```

Hugo

These optimisations can be achieved using asset minification in Hugo. If you are writing a theme, this can be included as part of Hugo Pipes asset processing. If you are using somebody else's theme, you can check the output files to see whether it has been used (minified files should have .min in the filename).

If HTML minification is not part of the theme, it can also be enabled with the --minify build flag.

File compression

After the contents of the files have been minified, the next step is compression. Compression re-encodes the data contained within the file to reduce its total size. The most common and widely supported compression format is gzip, with brotli, developed by Google, also gaining in popularity. See this page for a detailed description and comparison.
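To get a feel for the two formats, the JavaScript (Node.js) sketch below compresses the same text with gzip and brotli using the built-in zlib module, each at its highest setting. The repetitive HTML sample is made up, so the printed sizes are only illustrative; real savings depend on the content being compressed.

```javascript
// Compare gzip and brotli output sizes with Node.js's built-in zlib module.
const zlib = require("zlib");

// Hypothetical sample input: repetitive HTML, loosely like a list page.
const html = '<li class="post"><a href="/posts/example/">Example post</a></li>\n'.repeat(200);
const input = Buffer.from(html, "utf8");

// gzip at level 9, the same setting as gzip --best.
const gz = zlib.gzipSync(input, { level: 9 });

// brotli at its maximum quality (11).
const br = zlib.brotliCompressSync(input, {
  params: { [zlib.constants.BROTLI_PARAM_QUALITY]: zlib.constants.BROTLI_MAX_QUALITY },
});

console.log(`original: ${input.length} bytes`);
console.log(`gzip:     ${gz.length} bytes`);
console.log(`brotli:   ${br.length} bytes`);

// Both formats are lossless: decompressing returns the original bytes exactly.
console.log(zlib.gunzipSync(gz).equals(input), zlib.brotliDecompressSync(br).equals(input));
```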

Pre-Compression

Since the site serves static content, all the files can be compressed in advance. Each compressed file is stored alongside the original, so the webserver can choose between the compressed and original versions depending on what the client's browser supports.
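As a rough illustration of that choice, here is a simplified JavaScript (Node.js) sketch of how a server might pick a precompressed sidecar file from the browser's Accept-Encoding request header. The function names and the tie-breaking rule are assumptions for illustration; a real webserver such as Caddy may differ in its details.

```javascript
// Simplified sketch of picking a precompressed sidecar file from the
// Accept-Encoding request header. Hypothetical helper names; not Caddy's code.

// File extension of the sidecar file for each encoding.
const SIDECAR_EXT = { br: ".br", zstd: ".zst", gzip: ".gz" };

// Parse e.g. "gzip, deflate, br;q=0.9" into a Map of encoding -> q-value.
function parseAcceptEncoding(header) {
  const prefs = new Map();
  for (const part of (header || "").split(",")) {
    const [name, ...params] = part.trim().split(";");
    if (!name) continue;
    let q = 1; // q defaults to 1 when not given
    for (const param of params) {
      const [key, value] = param.trim().split("=");
      if (key === "q") q = Number(value);
    }
    prefs.set(name.trim().toLowerCase(), q);
  }
  return prefs;
}

// Return the sidecar to serve for `file`, given the encodings that exist on
// disk in server-preference order, or null to serve the uncompressed file.
// An empty or missing header is treated here as "no compression".
function chooseFile(file, acceptEncoding, available) {
  const prefs = parseAcceptEncoding(acceptEncoding);
  let best = null;
  let bestQ = 0;
  for (const enc of available) {
    const q = prefs.get(enc) ?? prefs.get("*") ?? 0;
    // Strictly greater, so ties go to the encoding listed first in `available`;
    // q=0 means "not acceptable" and is never chosen.
    if (q > bestQ) {
      best = enc;
      bestQ = q;
    }
  }
  return best ? { path: file + SIDECAR_EXT[best], encoding: best } : null;
}

console.log(chooseFile("index.html", "gzip, deflate, br", ["br", "gzip"]));
```

For example, a request for index.html that accepts gzip, deflate and br gets index.html.br when both br and gzip sidecars exist, while a browser that only accepts gzip gets index.html.gz.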

A Linux command to compress all HTML, CSS and Javascript files using gzip and save them with the .gz extension looks like this:

```sh
find /path/to/webroot -type f \( -name '*.html' -o -name '*.js' -o -name '*.css' \) -exec gzip -v -k -f --best {} \;
```

The -k flag keeps the original file, -f overwrites the output file if it exists, -v reports the compression achieved for each file, and --best performs the best possible compression. Since the compression is performed in advance, and decompression speed doesn't really vary with level, there isn't really a reason not to select the best possible compression.
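To check the reasoning about compression levels, the JavaScript (Node.js) sketch below compresses the same generated text at gzip level 1 and at level 9, the level --best selects. The sample text is made up; on typical text, level 9 usually gives a somewhat smaller file, and the same gunzip call decompresses either output with no level setting needed.

```javascript
// Compare gzip's fastest level (1) with its best level (9, i.e. --best).
const zlib = require("zlib");

// Hypothetical input: stylesheet-like text with some variation between rules.
const lines = [];
for (let i = 0; i < 500; i++) {
  lines.push(`.post-${i} { margin: ${i % 7}px 0; padding: ${i % 5}em; }`);
}
const input = Buffer.from(lines.join("\n"), "utf8");

const fast = zlib.gzipSync(input, { level: 1 });
const best = zlib.gzipSync(input, { level: 9 });

console.log(`level 1: ${fast.length} bytes`);
console.log(`level 9: ${best.length} bytes`);

// Decompression needs no level setting: gunzip handles both outputs the same way.
console.log(zlib.gunzipSync(fast).equals(input), zlib.gunzipSync(best).equals(input));
```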

Other files that you may have that could benefit from compression are XML and SVG, which could be added to the command.

After installing brotli, the same command can be used, swapping gzip for brotli. The snippet below is from the makefile I use for my website. Running make compress from the command line after building the site compresses all the files using both gzip and brotli, saving the corresponding .gz and .br files.

```makefile
# Find command to get compressible content
FINDFILES=find ${DESTDIR} -type f \( -name '*.html' -o -name '*.js' -o -name '*.css' -o -name '*.xml' -o -name '*.svg' \)

...

# Create gzip and brotli compressed content
.PHONY: compress
compress:
	${FINDFILES} -exec gzip -v -k -f --best {} \;
	${FINDFILES} -exec brotli -v -k -f --best {} \;
```

Caddy Config

To enable Caddy to serve the compressed files, add the precompressed subdirective to file_server, followed by one or more of gzip, br or zstd. If multiple compressed files are available, Caddy will serve the one favoured by the browser, as indicated by its Accept-Encoding request header. Below is an example of my Caddyfile.

```
domwil.co.uk {
    # Webroot
    root * /var/www/html

    # Enable the static file server.
    file_server {
        precompressed br gzip
    }
}
```

Impact of compression

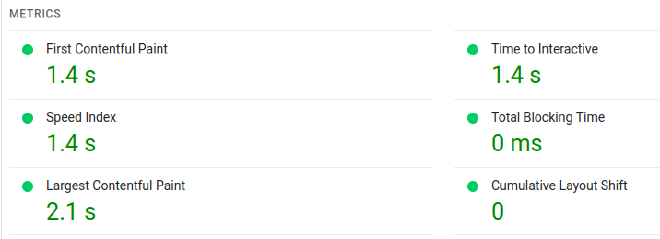

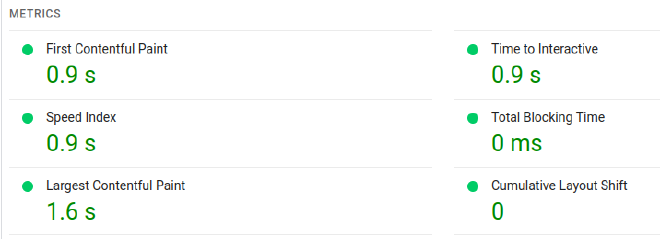

Using PageSpeed Insights, which is based on Google Lighthouse, the performance of the site (as well as other important aspects) can be tested. The images above show how the load time of this website's homepage improved by 0.5 seconds just by compressing text files.

Compressing on the fly

Caddy, like any other popular webserver, can also be configured to compress files on demand. This doesn't really make sense for static content, but can be beneficial for dynamic content. There will always be a trade-off between the transfer time saved by compressing the file and the time and CPU effort taken to compress it, and this will vary with compression level.

The Caddy directive to achieve this is encode, but I won’t cover it here.

Caching

The quickest way to deliver content is to not deliver it at all. This is entirely possible if the user has previously received the requested file, the browser has saved it, and the server-side copy has not changed in the meantime. This behaviour is controlled with the Cache-Control header.

For our use case, the main directive of interest in the Cache-Control header is max-age. The webserver sets it when responding to a request, and it tells the browser how long it should consider the content to be valid. It doesn't mean the browser won't ask for the content again (as its cache size is limited), but it should reduce the load on the server and speed up loading times for repeat visits to the site. The following Caddyfile tells the server to set max-age to 1 week (604,800 seconds) for all requests:

```
domwil.co.uk {
    ...

    # add cache control header for browser caching
    header Cache-Control max-age=604800
}
```

This is great, but what if a file on the server is modified? The client's browser might remember the old version for up to a week before getting the updated version.
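To make the one-week window concrete, the JavaScript sketch below shows where 604800 comes from and a simplified version of the freshness check a browser cache makes: a stored copy is reused without asking the server while its age is below max-age. The function names are hypothetical, and the sketch ignores other directives (such as no-cache), the Age header and heuristic freshness.

```javascript
// One week in seconds: 7 days * 24 hours * 60 minutes * 60 seconds.
const ONE_WEEK = 7 * 24 * 60 * 60;

// Extract max-age (in seconds) from a Cache-Control header value, or null if absent.
function parseMaxAge(cacheControl) {
  const match = /(?:^|,)\s*max-age\s*=\s*"?(\d+)"?/i.exec(cacheControl);
  return match ? Number(match[1]) : null;
}

// Simplified freshness check: a stored response is fresh while its age is
// below max-age. Once stale, the browser has to go back to the server.
function isFresh(cacheControl, storedAtMs, nowMs) {
  const maxAge = parseMaxAge(cacheControl);
  if (maxAge === null) return false; // heuristic freshness ignored in this sketch
  const ageSeconds = (nowMs - storedAtMs) / 1000;
  return ageSeconds < maxAge;
}

const storedAt = Date.UTC(2024, 0, 1);
const day = 24 * 60 * 60 * 1000;
console.log(ONE_WEEK, isFresh("max-age=604800", storedAt, storedAt + 6 * day));
```

So a copy stored six days ago is still fresh under max-age=604800, while one stored eight days ago is not, and that is exactly the window in which an edited file could be missed.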

Cache busting

Cache busting is the process of renaming a resource each time a change is made to it. For example, the original version of the stylesheet could be named mystyle.v1.css, and included in the HTML header like this:

```html
...
<head>
    <link rel="stylesheet" href="mystyle.v1.css">
</head>
...
```

mystyle.v1.css may then be cached for as long as specified in the Cache-Control header. After making a CSS change, the file is renamed to mystyle.v2.css and the HTML is updated to:

```html
...
<head>
    <link rel="stylesheet" href="mystyle.v2.css">
</head>
...
```

Now, assuming that the HTML itself is not cached, the page no longer requests the old resource, so the browser must request the updated one.

Hugo

Cache busting can be included automatically in Hugo. Instead of version numbers, the file hash is used in the file name. The added benefit of this is that Subresource Integrity can be used, which verifies that content has not been tampered with. More info on the Fingerprinting and SRI page.
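The idea behind fingerprinting can be sketched in a few lines of JavaScript (Node.js) with the built-in crypto module. This is not Hugo's implementation: the function names are hypothetical and SHA-256 is an assumed choice of hash. The file name embeds a hex digest of the content, so any edit produces a new name, and the integrity value uses the algorithm-dash-base64-digest form that Subresource Integrity expects.

```javascript
// Sketch of content-hash cache busting plus a Subresource Integrity value,
// using Node's built-in crypto module. Illustrative only; not Hugo's code.
const crypto = require("crypto");
const path = require("path");

// Insert a hex SHA-256 digest of the content before the extension of a bare
// file name: "mystyle.css" -> "mystyle.<64 hex chars>.css".
function fingerprintName(fileName, content) {
  const hash = crypto.createHash("sha256").update(content).digest("hex");
  const ext = path.extname(fileName);
  return `${path.basename(fileName, ext)}.${hash}${ext}`;
}

// SRI values take the form "<algorithm>-<base64 digest>". The browser hashes the
// downloaded file and refuses to apply it if the digests do not match.
function integrity(content) {
  return "sha256-" + crypto.createHash("sha256").update(content).digest("base64");
}

const css = "body { color: #222; }";
console.log(`<link rel="stylesheet" href="${fingerprintName("mystyle.css", css)}" integrity="${integrity(css)}">`);
```

Because the name is derived from the content, rebuilding an unchanged file gives the same name and browsers keep using their cached copy; only files that actually changed get new URLs.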

Caddy

To complete the Caddy configuration, the Cache-Control header with max-age is added to all resources that contain the cache busting hash. For the theme I am using, these are the Javascript, CSS and image files. This uses a regex Request Matcher.
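Because the matcher selects files by folder and extension rather than by detecting a hash, it relies on the theme fingerprinting every such file; anything unhashed in those locations would also receive the week-long max-age. A quick way to see what the expression selects is to run it against some sample paths. The JavaScript sketch below copies the regular expression from the path_regexp line of the Caddyfile that follows; this particular pattern means the same thing in JavaScript as in Go's regexp package, which Caddy uses. The sample paths are hypothetical.

```javascript
// Which request paths would the @cache matcher select?
// Regular expression copied from the path_regexp line of the Caddyfile.
const CACHE_PATTERN = /\/(js\/.*\.js|css\/.*\.css|posts\/.*\.(jpg|png|jpeg|gif|webp))$/;

// Hypothetical sample paths.
const samples = [
  "/js/main.4f1c9e.js",
  "/css/style.min.3b7a2d.css",
  "/posts/my-post/photo.webp",
  "/js/main.js", // no hash, but matched all the same
  "/index.html",
  "/images/logo.png",
];

for (const p of samples) {
  console.log(`${CACHE_PATTERN.test(p) ? "matched" : "skipped"}  ${p}`);
}
```

With that caveat in mind, the Caddyfile configuration is: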

```
domwil.co.uk {
    ...

    # cache match for js, css and any images with cache busting hash
    @cache path_regexp \/(js\/.*\.js|css\/.*\.css|posts\/.*\.(jpg|png|jpeg|gif|webp))$

    # add cache control header for cached files
    header @cache Cache-Control max-age=604800
}
```

Server-side caching

Files can also be cached on the server. Instead of reading the file from the disk for every request, the file is stored in RAM after the first request so that subsequent requests can be served faster.

In practice, the operating system already handles this by keeping recently read files in memory, so it is unnecessary for the webserver to perform any caching. This is explained in this thread.

Conclusion

Minification and compression are easy ways to reduce file size and improve site performance. The former can be added within a Hugo theme, the latter with a one-line command and some webserver configuration.

Another large contributor to network transfer time is images. This fantastic blog post has a great comparison of web image formats, a topic I would like to investigate in the future.